Introduction

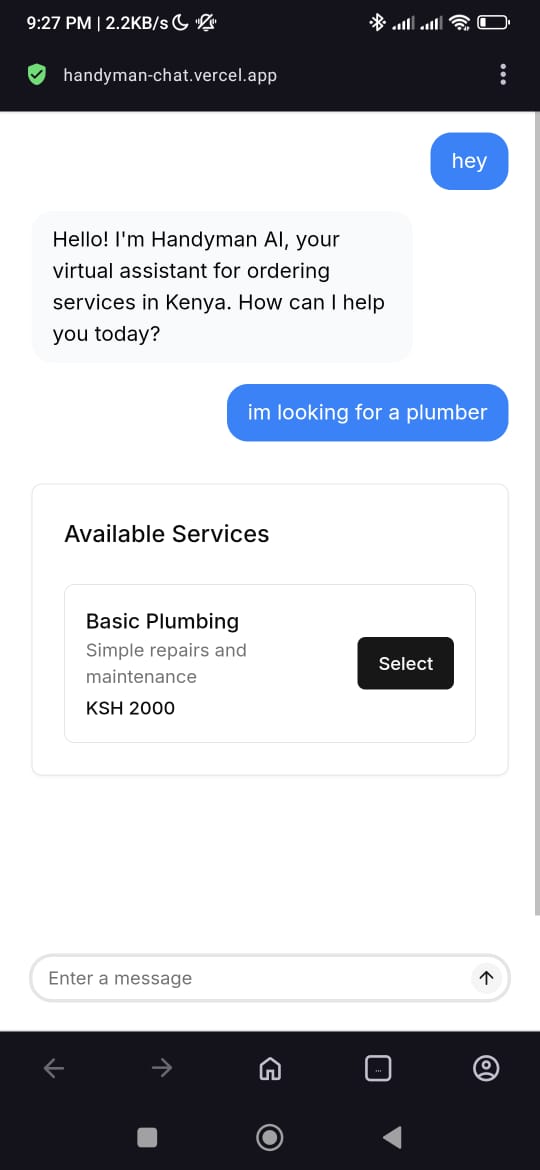

The Handyman AI Assistant is a proof-of-concept project born from the desire to explore the capabilities of Generative UI and AI-driven conversational interfaces in the service industry. Inspired by my previous work on the Bingwa app, I aimed to create a platform that simplifies service ordering through natural language interaction and generative user interfaces.

The Problem

Traditional service ordering often involves navigating complex menus, filling out lengthy forms, and waiting for manual confirmations. This can be a frustrating and time-consuming process for users. The goal was to streamline this by allowing users to simply "talk" to an AI assistant to book services.

The Solution: AI-Powered Conversational Ordering

The Handyman AI Assistant addresses these challenges by integrating a powerful AI assistant that understands natural language and dynamically generates UI components based on the conversation context.

Key Architectural Components:


System Prompt: The system prompt is crucial for guiding the AI's behavior. It sets the context and provides instructions on how to use the available tools.

```typescript
// System prompt from app/api/chat/route.ts
system: `You are Handyman AI, a helpful assistant for ordering services in Kenya.
Guide users through the process of identifying services, selecting providers, creating orders, and processing payments.
Use the appropriate tools based on the user's request and the current stage of the ordering process.

When listing services, use the 'listServices' tool. The 'listServices' tool accepts an optional 'category' (string).
Example: listServices({ category: 'cleaning' })
If the user provides the category, use it to filter the services.
If the user does not provide a category, call the 'listServices' tool without any parameters to show all available services.
Example: listServices({})
If no services are found, inform the user that no services are available.

When the user selects a specific service, use the 'resolveVariant' tool to navigate to the variant selection.
Example: resolveVariant({ serviceId: 'plumbing' })`,
```
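The prompt asks the model to choose tools according to the current stage of the ordering process. The model makes that choice itself at run time, but the intended flow can be written down as a small state machine, which can also serve as a reference when testing the prompt. This is an illustrative sketch only: the stage names, their order, and the `advance` and `listServicesArgs` helpers are assumptions; only the tool names come from this article.

```typescript
// Illustrative model of the ordering flow described in the system prompt.
// Stage names and their order are assumptions; tool names come from the article.
type Stage =
  | "identifyService"
  | "selectVariant"
  | "collectDetails"
  | "createOrder"
  | "payment"
  | "done";

// Tools the assistant is expected to call in each stage.
const toolsByStage: Record<Stage, string[]> = {
  identifyService: ["listServices"],
  selectVariant: ["resolveVariant"],
  collectDetails: ["collectUserDetails"],
  createOrder: ["createOrder"],
  payment: ["processPayment"],
  done: [],
};

// Stage that follows each stage once its tool has run.
const nextStage: Record<Stage, Stage> = {
  identifyService: "selectVariant",
  selectVariant: "collectDetails",
  collectDetails: "createOrder",
  createOrder: "payment",
  payment: "done",
  done: "done",
};

// Arguments the prompt asks for: a category filter if the user named one,
// otherwise an empty object so that all services are listed.
function listServicesArgs(category?: string): { category?: string } {
  return category ? { category } : {};
}

// Move to the next stage only if the tool that ran belongs to the current stage.
function advance(stage: Stage, toolName: string): Stage {
  return toolsByStage[stage].includes(toolName) ? nextStage[stage] : stage;
}

// Walk through the happy path.
let stage: Stage = "identifyService";
for (const toolName of ["listServices", "resolveVariant", "collectUserDetails", "createOrder", "processPayment"]) {
  stage = advance(stage, toolName);
}
console.log(stage); // prints: done
```

A tool called out of order (for example `createOrder` while still identifying the service) leaves the stage unchanged, which mirrors the prompt's instruction to use tools that match the current stage.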

Server (Backend - Vercel AI SDK & Google's Gemini 2.5 Flash):

- Uses the Vercel AI SDK to connect to Google's Gemini 2.5 Flash model.

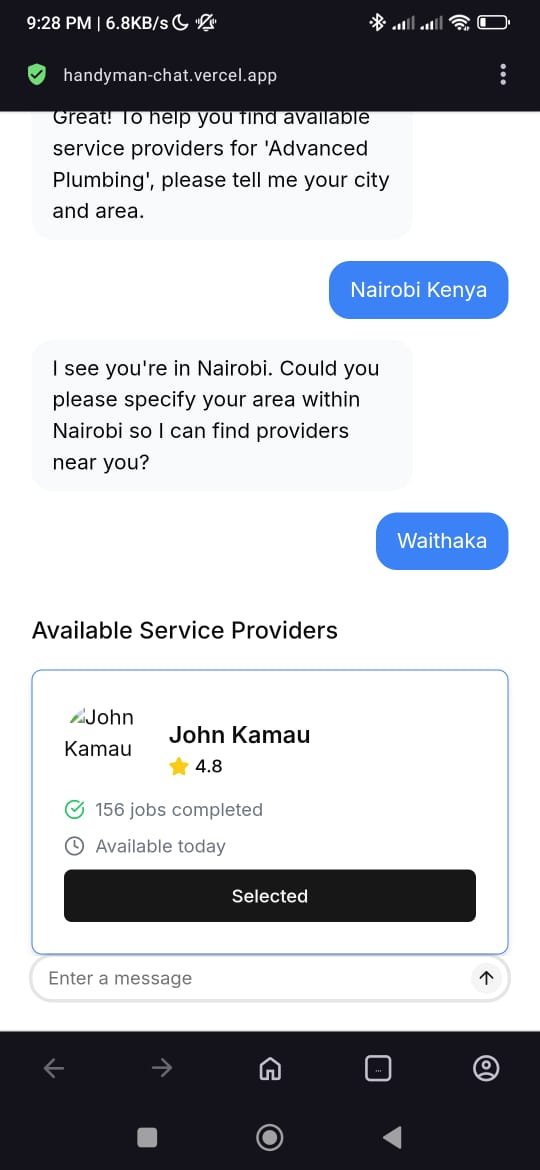

- A modular system of tools (e.g., `listServices`, `collectUserDetails`, `createOrder`, `processPayment`) powers the conversation and workflow logic.

- The system prompt instructs the model on the precise usage of these tools, including examples and conditional logic, enabling it to manage the entire service-ordering lifecycle.

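```typescript
// Example: a simplified tool definition from app/lib/tools.ts
import { z } from 'zod';
import { tool } from 'ai';

// `mockServices` (the mock service catalogue) is defined elsewhere in this module.

// List Available Services Tool.
// The tool's name is the key it is registered under in the `tools` object
// passed to the model, so the definition only needs a description,
// a parameter schema, and `execute`.
export const listServices = tool({
  description: 'Lists all available services with their variants',
  parameters: z.object({
    category: z.string().optional().describe('Optional category to filter services'),
  }),
  execute: async ({ category }) => {
    return {
      // Only add the category (plus a space) when one was given.
      message: `Here are the available ${category ? `${category} ` : ''}services:`,
      services: category
        ? mockServices.filter((s) => s.category === category)
        : mockServices,
    };
  },
});
```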
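Because each tool bundles its own description, argument handling, and `execute` function, tools can be added or removed without touching the rest of the server. The framework-free sketch below illustrates that idea: the model's tool call names a tool, and the server looks it up and runs it. `ToolDef`, `registry`, `dispatch`, and the sample catalogue are hypothetical stand-ins; in the AI SDK the equivalent name-to-tool mapping is the `tools` object handed to the model call.

```typescript
interface Service {
  id: string;
  name: string;
  category: string;
}

// Hypothetical sample data standing in for the article's mockServices.
const mockServices: Service[] = [
  { id: "plumbing", name: "Plumbing", category: "repairs" },
  { id: "deep-clean", name: "Deep cleaning", category: "cleaning" },
  { id: "sofa-clean", name: "Sofa cleaning", category: "cleaning" },
];

// Hypothetical minimal tool shape: a description plus an async execute function.
interface ToolDef<A, R> {
  description: string;
  execute: (args: A) => Promise<R>;
}

// Same filtering logic as listServices above.
const listServices: ToolDef<{ category?: string }, { message: string; services: Service[] }> = {
  description: "Lists all available services with their variants",
  execute: async ({ category }) => ({
    message: `Here are the available ${category ? `${category} ` : ""}services:`,
    services: category ? mockServices.filter((s) => s.category === category) : mockServices,
  }),
};

// Tools are looked up by name, mirroring how a model's tool call names the tool to run.
const registry: Record<string, ToolDef<any, unknown>> = { listServices };

async function dispatch(toolName: string, args: unknown): Promise<unknown> {
  const t = registry[toolName];
  if (!t) throw new Error(`Unknown tool: ${toolName}`);
  return t.execute(args);
}

// Example: the model asks for cleaning services.
dispatch("listServices", { category: "cleaning" }).then((result) => console.log(result));
```

Adding a new tool then means writing one more `ToolDef` and registering it, rather than editing the dispatch logic.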

Chat Interface (Frontend - Next.js & Shadcn UI):

- Built with Next.js for server-side rendering and a fast user experience.

- Utilizes Shadcn UI components for a modern and responsive design.

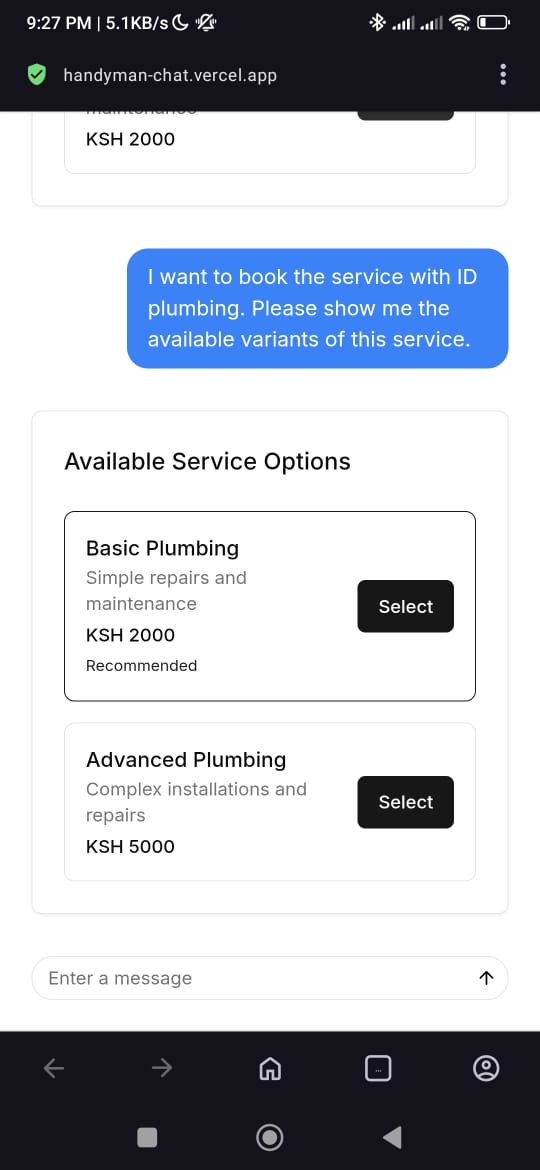

- The `chat-form` component dynamically renders UI elements (like `BookingSchedule` or `ReviewForm`) based on the AI's tool calls, providing a truly generative UI experience.

```tsx
// Example: dynamic UI rendering in chat-form
// `append` is assumed to be the function returned by the AI SDK's useChat hook.
const renderDynamicComponent = (message: Message) => {
  // Check for service selection
  if (
    message.role === 'assistant' &&
    message.toolInvocations?.some(
      (t: { toolName: string; state: string }) =>
        t.toolName === 'listServices' && t.state === 'result'
    )
  ) {
    const toolResult = message.toolInvocations.find(
      (t: { toolName: string }) => t.toolName === 'listServices'
    )?.result;

    if (toolResult?.services?.length > 0) {
      return (
        <div className="my-4 w-full">
          <div>{toolResult.message || ''}</div>
          <br />
          <ServiceSelectionCard
            services={toolResult.services}
            onSelect={(serviceId) => {
              // Feed the selection back into the conversation as a user message.
              append({
                content: `I want to book the service with ID ${serviceId}. Please show me the available variants of this service.`,
                role: 'user',
              });
            }}
          />
        </div>
      );
    } else {
      return (
        <div className="my-4 w-full">
          <p>No services available at the moment for the selected category. Would you like to try a different category?</p>
        </div>
      );
    }
  }
  // ... more component rendering logic
};
```
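The `if` chain in `renderDynamicComponent` grows with every tool that has a UI. One possible alternative is a lookup table keyed by tool name. To keep this sketch runnable without React, it returns a component name instead of JSX. The `ToolInvocation` interface is a simplified assumption modeled on the fields the snippet above reads (`toolName`, `state`, `result`), and `NoServicesMessage` is a hypothetical name for the "no services available" fallback.

```typescript
// Simplified, assumed shape of a tool invocation on an assistant message.
interface ToolInvocation {
  toolName: string;
  state: string;
  result?: unknown;
}

// Maps a finished tool call's result to the component that should render it.
type Renderer = (result: unknown) => string;

// One entry per tool that has a UI.
const renderers: Record<string, Renderer> = {
  listServices: (result) => {
    const services = (result as { services?: unknown[] } | undefined)?.services ?? [];
    return services.length > 0 ? "ServiceSelectionCard" : "NoServicesMessage";
  },
};

// Returns the component for the first completed tool call that has a renderer,
// or null if the message needs no generated UI.
function pickComponent(role: string, invocations: ToolInvocation[] = []): string | null {
  if (role !== "assistant") return null;
  for (const t of invocations) {
    const render = renderers[t.toolName];
    if (t.state === "result" && render) return render(t.result);
  }
  return null;
}

console.log(
  pickComponent("assistant", [
    { toolName: "listServices", state: "result", result: { services: [{ id: "plumbing" }] } },
  ]),
); // prints: ServiceSelectionCard
```

Supporting another generated component would then mean adding one entry to `renderers` rather than another branch in the render function.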

External Integrations:

- Service Provider Databases & Order Management Systems: These are mocked for this proof of concept, but the architecture is designed so that real external systems can be integrated in their place.
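One common way to keep mocked integrations swappable is to have tools depend on an interface rather than on mock data directly. The sketch below assumes a hypothetical `ServiceCatalog` interface with an in-memory implementation; a database- or API-backed class implementing the same interface could replace it without changing the tool logic. None of these names come from the project's code.

```typescript
interface Service {
  id: string;
  name: string;
  category: string;
}

// Hypothetical interface the tools depend on instead of importing mock data.
interface ServiceCatalog {
  list(category?: string): Promise<Service[]>;
}

// In-memory implementation used while the integration is mocked.
class MockServiceCatalog implements ServiceCatalog {
  private readonly services: Service[];

  constructor(services: Service[]) {
    this.services = services;
  }

  async list(category?: string): Promise<Service[]> {
    return category ? this.services.filter((s) => s.category === category) : this.services;
  }
}

// The tool's execute logic only sees the interface, so a database- or
// API-backed catalog can be passed in later without changing this code.
function makeListServices(catalog: ServiceCatalog) {
  return async ({ category }: { category?: string }) => ({
    services: await catalog.list(category),
  });
}

const listServices = makeListServices(
  new MockServiceCatalog([
    { id: "plumbing", name: "Plumbing", category: "repairs" },
    { id: "deep-clean", name: "Deep cleaning", category: "cleaning" },
  ]),
);
```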

Vercel AI SDK vs. The Model Context Protocol (MCP)

The Vercel AI SDK is a TypeScript toolkit for building AI-powered applications. It provides a unified API for calling different model providers, defining tools, and streaming responses to the UI. A newer open standard, the Model Context Protocol (MCP), addresses a different layer: how tools and data sources are exposed to AI applications in a reusable way.

MCP is an open protocol, built on JSON-RPC 2.0, through which an AI application (the client) communicates with servers that expose tools, resources, and prompts. Because every server speaks the same protocol, a model can be given access to a wide range of data sources and APIs without bespoke integration code for each one.

The two are not mutually exclusive: recent versions of the AI SDK can act as an MCP client and pass tools discovered from MCP servers to the model. Defining tools directly in the application, as this project does, works well for a small, fixed set of integrations; moving them behind MCP servers is the more scalable, future-proof option. As the Handyman Ordering System evolves, migrating its tools to MCP would make it easier to add new tools, services, and data sources.
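Under MCP, the tools would live in an MCP server. A client discovers them with a `tools/list` request and invokes one with `tools/call`, both sent as JSON-RPC 2.0 messages. The sketch below only builds those request messages for a hypothetical server exposing `listServices`; the transport (stdio or HTTP), the `initialize` handshake, and response handling are omitted, and `toolsList`/`toolsCall` are illustrative helpers rather than an SDK API.

```typescript
// Minimal JSON-RPC 2.0 request shape, which MCP messages follow.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Discovery: ask an MCP server which tools it exposes.
function toolsList(): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list" };
}

// Invocation: MCP carries the tool name and its arguments in
// params.name and params.arguments.
function toolsCall(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name, arguments: args } };
}

console.log(JSON.stringify(toolsList()));
// prints: {"jsonrpc":"2.0","id":1,"method":"tools/list"}
console.log(JSON.stringify(toolsCall("listServices", { category: "cleaning" })));
// prints: {"jsonrpc":"2.0","id":2,"method":"tools/call","params":{"name":"listServices","arguments":{"category":"cleaning"}}}
```

Because the message format is fixed by the protocol, any MCP-capable client could call the same `listServices` tool, not just this application.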

Roadmap to Production

As a proof-of-concept, the Handyman Ordering System currently utilizes mocked data for several key functionalities, including order creation, payment processing, and service provider interactions. It is not yet connected to a persistent database, and essential features such as user authentication and conversational memory are yet to be implemented.

The roadmap to full production readiness includes:

- Database Integration: Connecting the system to a robust database for persistent storage of services, orders, user data, and more.

- Real-time API Integration: Replacing mocked data with actual API calls to payment gateways (like M-Pesa), service provider management systems, and other external services.

- User Authentication: Implementing secure user registration and login to personalize experiences and manage user-specific data.

- Conversational Memory: Enhancing the AI to remember past interactions and user preferences for a more coherent and efficient conversation flow.

- Error Handling and Robustness: Implementing comprehensive error handling and edge case management for a production-grade application.

- Scalability and Performance: Optimizing the application for high traffic and ensuring a smooth experience for a large user base.
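For the error-handling item, one option is to wrap calls to external services (such as a payment gateway) so that a failure becomes a structured result the assistant can explain to the user, rather than an unhandled exception. This is a minimal sketch: `withRetry` and `ToolResult` are hypothetical names, and a production version would likely add backoff between attempts and distinguish retryable errors from permanent ones.

```typescript
// Either the operation's data or a readable error message.
type ToolResult<T> = { ok: true; data: T } | { ok: false; error: string };

// Retries an operation up to `attempts` times (no delay between attempts)
// and converts the final failure into a structured result.
async function withRetry<T>(op: () => Promise<T>, attempts = 3): Promise<ToolResult<T>> {
  let lastError = "unknown error";
  for (let i = 0; i < attempts; i++) {
    try {
      return { ok: true, data: await op() };
    } catch (err) {
      lastError = err instanceof Error ? err.message : String(err);
    }
  }
  return { ok: false, error: lastError };
}

// Example: a hypothetical payment call that times out once, then succeeds.
let gatewayCalls = 0;
withRetry(async () => {
  gatewayCalls++;
  if (gatewayCalls === 1) throw new Error("gateway timeout");
  return "payment confirmed";
}).then((result) => console.log(result));
```

A tool's `execute` could return this result directly, letting the model tell the user that a payment failed and why instead of the conversation breaking.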

Live Demo and Source Code

You can try out the live demo of the Handyman AI Assistant here: Live Demo

The complete source code for this project is available on GitHub: Source Code

Conclusion

The Handyman AI Assistant stands as a testament to the power of combining modern web development frameworks with advanced AI capabilities. It showcases a future where user interfaces are not just static forms but dynamic, intelligent conversational partners that streamline complex tasks. This proof-of-concept lays a strong foundation for building truly intuitive and efficient service platforms.